Humans have always excelled at recognizing faces. From early childhood, we learn to identify family members, friends, and unfamiliar people without conscious effort. Even under poor lighting, from unusual angles, or after decades of change, the human brain still manages to recognize faces reliably.

Long ago, this skill helped people stay safe and build social bonds. Knowing who belonged to your group and who did not made a big difference for survival.

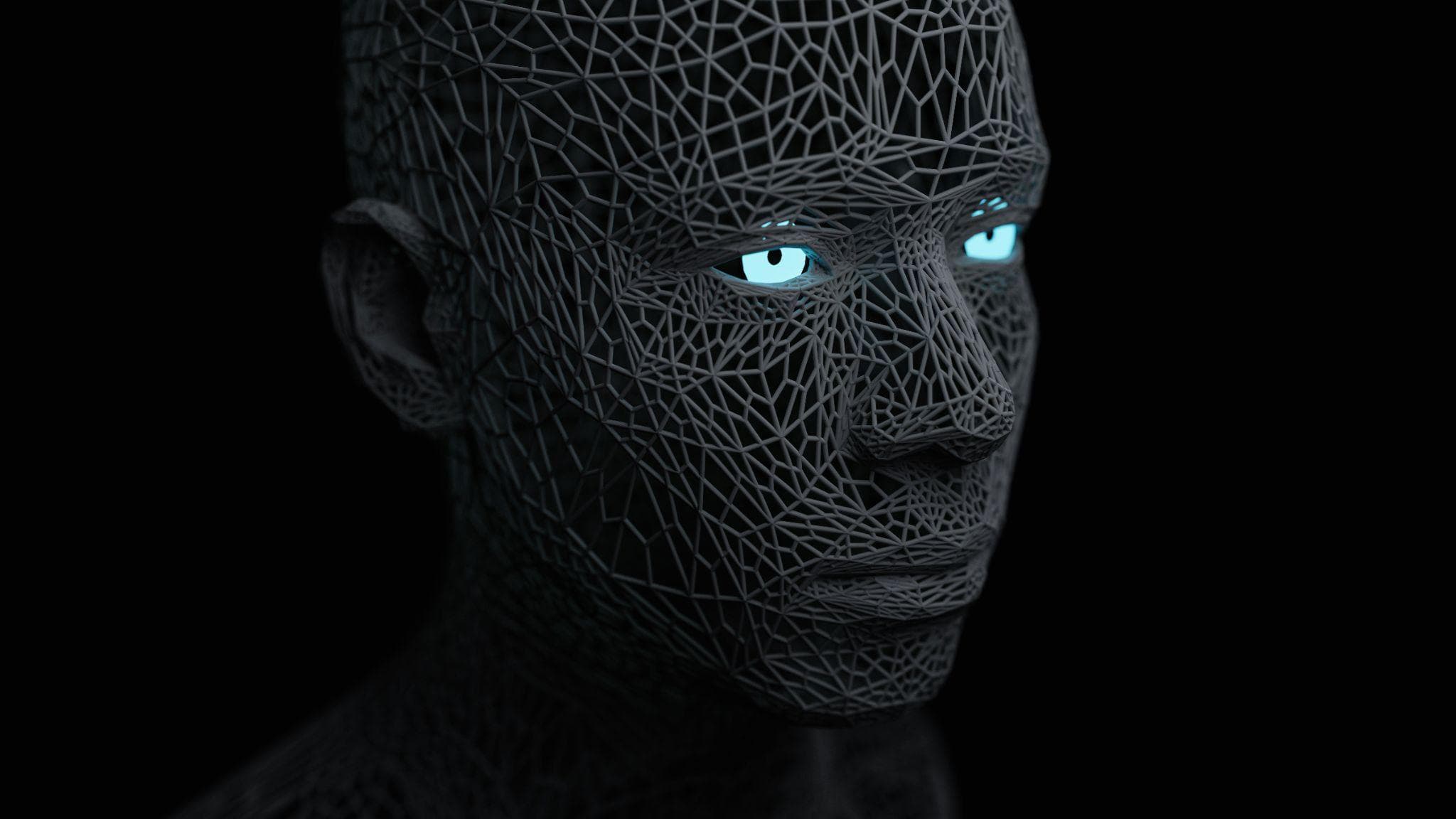

Machines struggled with this task for a long time. Cameras could record faces but could not interpret them. Over the years, engineers have worked to replicate the way humans recognize faces and, in some contexts, to surpass it.

How Facial Recognition Technology Evolved Through Distinct Phases

Early facial recognition systems operated with significant limitations. Initial approaches attempted to match faces by measuring basic features, such as the distance between the eyes or the contour of the nose. These methods worked only under ideal conditions and failed as soon as lighting, pose, or expression changed.
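To make the limitation concrete, here is a minimal sketch of feature-measurement matching. The landmark names, pixel coordinates, and tolerance are all hypothetical values chosen for illustration, not taken from any particular historical system. The sketch reduces a face to ratios of distances, which survive a change in image size but not a turn of the head.

```python
import math

def distance(p, q):
    """Euclidean distance between two (x, y) pixel coordinates."""
    return math.hypot(p[0] - q[0], p[1] - q[1])

def geometric_signature(landmarks):
    """Describe a face by distance ratios so that image scale cancels out."""
    left, right = landmarks["left_eye"], landmarks["right_eye"]
    eye_span = distance(left, right)
    eye_mid = ((left[0] + right[0]) / 2, (left[1] + right[1]) / 2)
    nose_length = distance(eye_mid, landmarks["nose_tip"])
    mouth_drop = distance(eye_mid, landmarks["mouth_center"])
    return (nose_length / eye_span, mouth_drop / eye_span)

def same_face(a, b, tolerance=0.05):
    """Declare a match when every ratio agrees within the (assumed) tolerance."""
    pairs = zip(geometric_signature(a), geometric_signature(b))
    return all(abs(x - y) <= tolerance for x, y in pairs)

# Hypothetical landmark positions for one face seen from the front.
frontal = {
    "left_eye": (100, 100),
    "right_eye": (160, 100),
    "nose_tip": (130, 140),
    "mouth_center": (130, 170),
}

# The same face photographed at twice the size: every ratio is unchanged.
closer = {name: (2 * x, 2 * y) for name, (x, y) in frontal.items()}

# The same face with the head turned: the eyes appear closer together.
turned = dict(frontal, left_eye=(110, 100), right_eye=(150, 100))

matches_closer = same_face(frontal, closer)
matches_turned = same_face(frontal, turned)
```

In this toy example the scaled image still matches, while the turned head does not, because the apparent eye spacing shrinks and every ratio built on it shifts.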

Improved techniques emerged over time. Computers learned to compare faces by analyzing patterns across the whole face rather than measuring isolated features. Results became more reliable, but these methods still depended heavily on consistent lighting and pose.

The real shift came when artificial intelligence became capable enough to learn from data at scale. Contemporary systems are trained on enormous collections of facial images. Rather than storing photographs for comparison, they learn a compact numerical representation of what makes each face distinctive.
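One common way to use such a learned representation is to compare two faces by the angle between their feature vectors, often called embeddings. The sketch below assumes the embeddings have already been produced by some trained model. The four-dimensional vectors and the 0.8 threshold are made-up values for illustration; real embeddings typically have a hundred or more dimensions, and thresholds are tuned per system.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors; 1.0 means identical direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def is_same_person(embedding_a, embedding_b, threshold=0.8):
    """Treat two embeddings as the same person above an assumed threshold."""
    return cosine_similarity(embedding_a, embedding_b) >= threshold

# Hypothetical embeddings: one person in two different photos, plus a stranger.
alice_morning = [0.9, 0.1, 0.3, 0.2]
alice_evening = [0.8, 0.2, 0.35, 0.15]
bob = [0.1, 0.9, 0.2, 0.6]

alice_matches_alice = is_same_person(alice_morning, alice_evening)
alice_matches_bob = is_same_person(alice_morning, bob)
```

Because the comparison happens between vectors rather than pixels, two photos of the same person can differ in lighting or angle and still land close together, provided the model that produced the embeddings was trained to ignore those differences.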

Because of this foundational change, smart cameras can now recognize individuals reliably, even when subjects move, turn their heads, or look different from when they were first recorded.

How Accuracy and Recognition Quality Reached Current Levels

Current facial recognition systems operate far beyond what earlier versions could achieve. They function effectively in low light, adverse weather, and densely populated environments. They maintain recognition accuracy when people wear glasses, hats, or alter their hairstyles significantly.

Modern systems also verify that a live person is in front of the camera, a check often called liveness detection. This capability guards against spoofing with photographs or video playback. Cameras examine movement patterns, depth information, and natural facial dynamics to confirm genuine presence.

Many smart cameras now perform this processing on the device rather than transmitting data elsewhere. This architectural choice speeds up recognition and reduces dependency on external infrastructure. The practical outcome is faster, more consistent recognition with fewer interruptions for the user.

How Smart Cameras Started Thinking Independently

Contemporary smart cameras have processing capabilities that previous generations lacked entirely. Earlier systems sent video to remote servers for analysis. This introduced latency and made performance dependent on the quality of the internet connection.

Current cameras include specialized processors designed specifically for artificial intelligence workloads. These components enable near-instant facial recognition on the device itself, so alerts are not held up while video travels to a server for analysis.

Some cameras allow users to label faces they want the system to remember. This training helps the camera understand who belongs in a particular environment and who does not. The system can then adjust its behavior based on who appears, making security more personalized and more effective for actual daily use.
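The labeling workflow described above can be sketched as a small on-device gallery. This is an illustrative outline only: the `FaceGallery` class, its method names, the `should_alert` helper, and the 0.8 threshold are all hypothetical, and the embeddings are assumed to come from whatever recognition model the camera runs.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

class FaceGallery:
    """Hypothetical store of user-labeled faces kept on the camera."""

    def __init__(self, threshold=0.8):
        self.threshold = threshold  # assumed minimum similarity for a match
        self._faces = {}            # label -> list of stored embeddings

    def label_face(self, label, embedding):
        """Remember one more example of a face under a user-chosen label."""
        self._faces.setdefault(label, []).append(embedding)

    def forget(self, label):
        """Delete every stored example for a label."""
        self._faces.pop(label, None)

    def identify(self, embedding):
        """Return the best-matching label, or None for an unknown face."""
        best_label, best_score = None, self.threshold
        for label, samples in self._faces.items():
            for sample in samples:
                score = cosine_similarity(embedding, sample)
                if score >= best_score:
                    best_label, best_score = label, score
        return best_label

def should_alert(gallery, embedding):
    """Alert only when the face does not match anyone the user labeled."""
    return gallery.identify(embedding) is None

# Usage with made-up embeddings.
gallery = FaceGallery()
gallery.label_face("resident", [0.9, 0.1, 0.3, 0.2])

known_label = gallery.identify([0.8, 0.2, 0.35, 0.15])
alert_for_stranger = should_alert(gallery, [0.1, 0.9, 0.2, 0.6])
```

Returning `None` for faces below the threshold is what lets the camera treat strangers differently from labeled household members, for example by alerting only on unknown faces.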

Where Facial Recognition Shows Up Now

Facial recognition works in many different places today. In homes, smart cameras can tell who lives there and who does not. This stops the annoying false alerts that older motion cameras sent all the time. When the camera knows your face, it does not bother you every time you walk to the kitchen.

Businesses find this technology useful too. Stores use it to see how shoppers move around and what areas get the most attention. Offices let workers walk in just by showing their faces. No one needs to carry key cards or remember long codes anymore.

Public places have started using facial recognition as well. Airports check passengers faster with it. Hospitals keep track of who enters certain rooms. Bus and train stations use it to stay safe and keep things moving. Because the technology works well in so many settings, different industries keep finding new ways to use it.

How Privacy Worries Changed the Technology

As facial recognition spread to more places, people started asking important questions. They wanted to feel protected, but they did not want to feel watched everywhere they went.

Newer systems give users more choices about their own information. Face data can be saved right on the camera instead of being sent somewhere else online. When a face record is no longer needed, users can delete it. People get to pick how their cameras act in different situations.

The people who build these systems also want them to work fairly. They use better training methods, so the technology makes fewer mistakes. They test systems on many different faces to make sure results stay accurate for everyone. The goal is to help people stay safe while still respecting their privacy.
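One simple way to check whether results stay accurate for everyone is to compute the error rate separately for each group in a test set and compare them. The sketch below assumes a list of test outcomes already exists. The group names, the outcomes, and both function names are hypothetical placeholders, not real evaluation data or an established tool.

```python
from collections import defaultdict

def error_rates_by_group(results):
    """Compute the fraction of wrong decisions for each group.

    `results` is an iterable of (group, predicted_match, actual_match) tuples.
    """
    counts = defaultdict(lambda: {"trials": 0, "errors": 0})
    for group, predicted, actual in results:
        counts[group]["trials"] += 1
        if predicted != actual:
            counts[group]["errors"] += 1
    return {group: c["errors"] / c["trials"] for group, c in counts.items()}

def largest_gap(rates):
    """Difference between the worst and best per-group error rates."""
    return max(rates.values()) - min(rates.values())

# Made-up test outcomes for two placeholder groups.
results = [
    ("group_a", True, True),
    ("group_a", False, False),
    ("group_a", True, True),
    ("group_a", False, False),
    ("group_b", True, True),
    ("group_b", True, False),   # a false match
    ("group_b", False, False),
    ("group_b", False, False),
]

rates = error_rates_by_group(results)
gap = largest_gap(rates)
```

A large gap between groups suggests the system needs more varied training data or a different threshold before it can be called fair.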